I am a performance requirement and this is my story. I just got built and accepted in the latest version of a Web-based SaaS (software as a service) application (my home!) that allows salespersons to search for businesses (about 14 million in number) and individuals (about 200 million in number) based on user-defined criteria, and then view the details of contacts from the search results. The application also allows subscribers to download the contact details for further follow-up.

I’m going to walk through my life in an agile environment: how I was conceived as an idea, grew up to become an acknowledged entity, and achieved my life’s purpose (getting nurtured in an application ever after). First, a disclaimer: the steps described below do not exhaustively cover all the decisions made during my life.

It all started about three months ago. The previous version of the application was in production with about 30,000 North American subscribers, and the agile team was looking to develop the next version.

One of the strategic ideas that had been discussed in considerable detail was to upgrade the application’s user interface to a modern Web 2.0 implementation, using more interactive and engaging on-screen process flows and notifications. The proposed changes were driven primarily by business conditions, market trends and customer feedback. Management had a vision of capturing a bigger piece of the market. The expectation was to add 100,000 new subscribers within twelve months of release, all from North America. A big revenue opportunity! Because the changes were confined to the user interface, no one thought of the potential impact on application performance. I was nowhere in the picture yet!

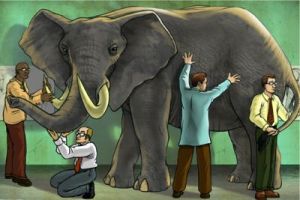

Due to the potential revenue impact of the user interface upgrade, the idea was moved high up in the application roadmap for immediate consideration. It became a user story that moved from the application roadmap to the release backlog. Application owners, architects and other stakeholders started discussing the upgrade in more detail. During one such meeting, someone asked the P-question: what about the performance? How will this change impact the performance of the application? It was agreed that the performance expectations of the user-interface changes should be clearly captured in the release backlog. That’s when I was conceived. I was vaguely defined as: “As an application owner of the sales leads application, I want the application to scale and perform well to as many as 150,000 users so that new and existing subscribers are able to interact with the application with no perceived delays.”

During sprint -1 (the discovery phase of the release planning sprint), I was picked up for further investigation and clearer definition. Different team members investigated the implications of me as an outcome. The application owner considered application usage growth for the next 3 years and came back with a revised peak number of users (300,000). The user interface designer built a prototype of the recommended user-interface changes, focusing on the most intensive transaction of the application: when a search criterion is changed, the number-of-contacts-available counter on the screen needs to be updated immediately. The architect tried to isolate possible bottlenecks in the network, database server, application server and Web server caused by the addition of more chatty Web components such as AJAX and JavaScript. The IT person looked at the current utilization of the hardware in the data center to identify any possible bottlenecks and came back with a recommendation to cater to the expected increase in usage. The lead performance tester identified the possible scenarios for performance testing the application. At the end of sprint -1, I was redefined as: “As an application owner of the sales leads application, I want the application to scale and perform well to as many as 300,000 simultaneous users so that when subscribers change their search criteria, an updated count of leads available is refreshed within 2 seconds on the screen.” I was defined with more specificity now. But was I realistic and achievable?

During sprint 0 (the design phase of the release planning sprint), I was picked up again to see the impact I would have on the application design. The IT person realized that supporting the revised number of simultaneous users would require purchasing additional servers. Since that process was going to take a long time, his recommendation was to scale the number of expected users back to 150,000. Due to the short time available, the user interface designer decided to limit the Web 2.0 translation to the search area of the application and put the remaining functional stories in the product backlog. The architect recommended modifying the way some of the Web services were invoked and fine-tuning some of the database queries. A detailed design diagram was presented to the team leads along with compliance guidelines. The lead performance tester focused on getting the staging area ready for me. I was reshaped to: “As an application owner of the sales leads application, I want the application to scale and perform well to as many as 150,000 simultaneous users so that when subscribers change their search criteria, an updated count of leads available is refreshed within 2 seconds on the screen.” I was now an INVESTed agile story, where INVEST stands for independent, negotiable, valuable, estimable, right-sized and testable.
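Being testable means I can be written down as a check. The sketch below is one way to do that in Python, not what the team actually used: the class name `PerformanceStory`, the helper names and, above all, the 95th percentile are assumptions of the sketch, since my wording only says “within 2 seconds” and names no percentile. The 150,000 users and the 2-second budget come straight from my definition.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PerformanceStory:
    """The sprint 0 version of the story, expressed as checkable numbers."""
    simultaneous_users: int = 150_000   # from the story
    max_refresh_seconds: float = 2.0    # from the story
    percentile: float = 95.0            # assumption: the story names no percentile


def nearest_rank_percentile(samples, pct):
    """Return the nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100.0))
    return ordered[rank - 1]


def is_met(story, refresh_times_seconds):
    """True when the chosen percentile of refresh times is within the budget."""
    observed = nearest_rank_percentile(refresh_times_seconds, story.percentile)
    return observed <= story.max_refresh_seconds
```

Given a list of refresh times measured during a load test, `is_met(PerformanceStory(), times)` answers whether the run stayed within my budget under that assumed percentile.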

During the agile sprint planning and execution phase, developers, QA testers and performance testers were all handed the requirements (including me) for the sprint. While developers started making changes to the code for the search screen, QA testers got busy writing test cases and performance testers finalized their testing scripts and scenarios. Builds were prepared every night, and incremental changes were tested as soon as new code was available. Both QA testers and performance testers worked closely with the developers to ensure functionality and performance were not compromised during the sprint. Daily scrums provided much-needed feedback to the team so that everyone knew what was working and what was not. A lot of time was spent on me to ensure my 2-second requirement did not slip to 3 seconds, as that would have had a direct impact on customer satisfaction. I felt quite important, sometimes even more than my cousin, the search screen user interface upgrade story! At the end of a couple of 4-week sprints, the application was completely revamped with Web 2.0 enhancements, with functionality and performance fully tested and ready to be released. I was ready!
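My story does not record the testers’ actual scripts, so the following is only a minimal sketch of the kind of scenario they might have run against each nightly build. `refresh_lead_count` is a hypothetical stand-in that sleeps instead of calling the real application, the thread pool simulates a handful of users rather than 150,000, and all function names are invented for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def refresh_lead_count(criteria):
    """Hypothetical stand-in for the real count refresh; sleeps instead of querying."""
    time.sleep(0.01)
    return 0


def timed_refresh(criteria):
    """Return how long one refresh took, in seconds."""
    start = time.perf_counter()
    refresh_lead_count(criteria)
    return time.perf_counter() - start


def run_scenario(simulated_users, criteria):
    """Fire one refresh per simulated user from a thread pool and collect timings."""
    with ThreadPoolExecutor(max_workers=simulated_users) as pool:
        return list(pool.map(timed_refresh, [criteria] * simulated_users))


def slowest_within_budget(timings, budget_seconds=2.0):
    """True when even the slowest refresh met the 2-second budget."""
    return max(timings) <= budget_seconds
```

Running `slowest_within_budget(run_scenario(20, {"state": "CA"}))` after each build would flag the night on which any refresh drifted past 2 seconds; the search criteria shown here are made up.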

Today, I will be deployed to the production environment. No major hiccups are expected, as during the last two weeks I was beta tested by some of our chosen customers in the staging environment. Both the customers and the internal stakeholders were happy with the outcome. During these two weeks, I hardened myself and got ready to perform continuously and consistently. Even though my story is ending today, my elders have told me that I will always be a role model (baseline) for future performance stories to come. I will live forever in some shape or form!

that they can better connect and engage with those users. Online advertisers use various methods for reaching the right audience in the right places and at the right time. For example, they have used users’ online activities, including browsing history, searches, social interactions and online purchases, to understand individual online users and then present them with their products and services at relevant times and on appropriate sites. Depending on the outcome of the active discussions around Do-Not-Track (DNT), advertisers might have to lean towards alternative ways to reach their desired audience, content targeting being one such option. Note that content targeting does not replace audience targeting; in fact, the two complement each other very well.
